SAP Virtualization - A Look Under the Hood

![[shutterstock.com: 725365669, Gorodenkoff]](https://e3mag.com/wp-content/uploads/2020/07/shutterstock_725365669.jpg)

The advantages of virtualization in the SAP environment are well known: lower costs, shorter release cycles, greater flexibility and the ability to clone even large SAP systems within minutes, for example to prepare and carry out upgrades. These are hard arguments to beat.

Yet many application owners question how much these advantages really matter, because their primary concerns are performance during operation and protection against data loss.

They are right to focus on this: what ultimately counts is that users can work productively with the various SAP applications and carry out their business tasks.

A virtualized SAP landscape must therefore demonstrate that these concerns are unfounded. This also applies to SAP systems, including Hana, S/4 and NetWeaver environments, that run on HCI (hyperconverged infrastructure) software from Nutanix.

It is therefore worth taking a look "under the hood" to understand why the advantages of virtualized SAP landscapes outlined above do not come at the expense of performance during operation.

With the Nutanix software, any combination of applications can be run in a company's own data center and scaled to levels that are otherwise only possible in a public cloud.

The platform delivers high input/output throughput (measured in IOPS, i.e. input/output operations per second) combined with low response-time latency.

Measurements at Nutanix have shown that the platform's performance is best exploited by increasing the number of database VMs rather than deploying a large number of database instances in a single virtual machine (VM).

In terms of input/output throughput, Nutanix uses its Distributed Storage Fabric (DSF) to meet the throughput and transaction requirements of even very complex transactional and analytical databases, including Hana.

Scaling and data locality

The entire architecture of the Nutanix hyperconverged software infrastructure follows a "web-scale" approach (see Figure 1, page 47): the nodes are servers with high computing power, each running a standard hypervisor.

These include VMware vSphere/ESXi, Microsoft Hyper-V and the Nutanix AHV hypervisor, which is certified for NetWeaver, Hana and S/4. Each node's hardware consists of processors, RAM and local storage: flash-based SSDs and/or SATA hard disks, as well as high-capacity NVMe storage.

Virtual machines run on each of these nodes, just as on any standard VM host. In addition, each node runs a controller VM that provides all Nutanix-specific functions.

Together, these controller VMs form an intermediate layer, the DSF, which makes all local storage devices available to all nodes as a single, unified pool.

You can also think of the DSF as a kind of virtual storage device that presents the local SSDs and hard disks to all nodes (via the NFS or SMB protocol) for storing the VMs' data. The individual VMs in a cluster write their data to the DSF as if it were a normal shared storage device.

The DSF has an important task here: it always stores data as "close" as possible to the VM that uses it. If a VM is running on node A, its data is usually also stored on node A.

As a result, data can be accessed at the highest possible speed without requiring expensive storage components.

This principle of data locality is supplemented by redundant storage: if data is to be highly available, copies of it are also stored on other nodes.
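To make the placement idea concrete, here is a minimal Python sketch. It assumes a cluster of named nodes and a replication factor `rf` that counts the total number of copies; the function `place_replicas` is hypothetical and deliberately naive. It keeps the first copy on the VM's own node and simply takes the next nodes in the list for the remaining copies, whereas the real DSF weighs factors such as capacity and load.

```python
from typing import List

def place_replicas(local_node: str, nodes: List[str], rf: int) -> List[str]:
    """Return the nodes that hold copies of a VM's data, local node first."""
    if local_node not in nodes:
        raise ValueError("the VM's node must belong to the cluster")
    if not 1 <= rf <= len(nodes):
        raise ValueError("rf must be between 1 and the number of nodes")
    # Data locality: the first copy stays on the node where the VM runs.
    remote = [n for n in nodes if n != local_node]
    # Redundancy: the remaining rf - 1 copies go to other nodes (naively, the first ones listed).
    return [local_node] + remote[: rf - 1]

print(place_replicas("A", ["A", "B", "C", "D"], rf=2))  # ['A', 'B']
```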

Thanks to this concept, the infrastructure based on Nutanix HCI software scales linearly from a configuration with just three nodes up to any number of nodes.

This is a major advantage for companies: the cluster can be expanded very quickly with just a few clicks, without having to worry about how the nodes are interconnected.

DSF also offers a range of important data management functions, such as cluster-wide "data tiering", in which data is automatically moved to faster or slower storage devices depending on how often it is accessed ("hot data" versus "cold data").

Data flow and storage functions

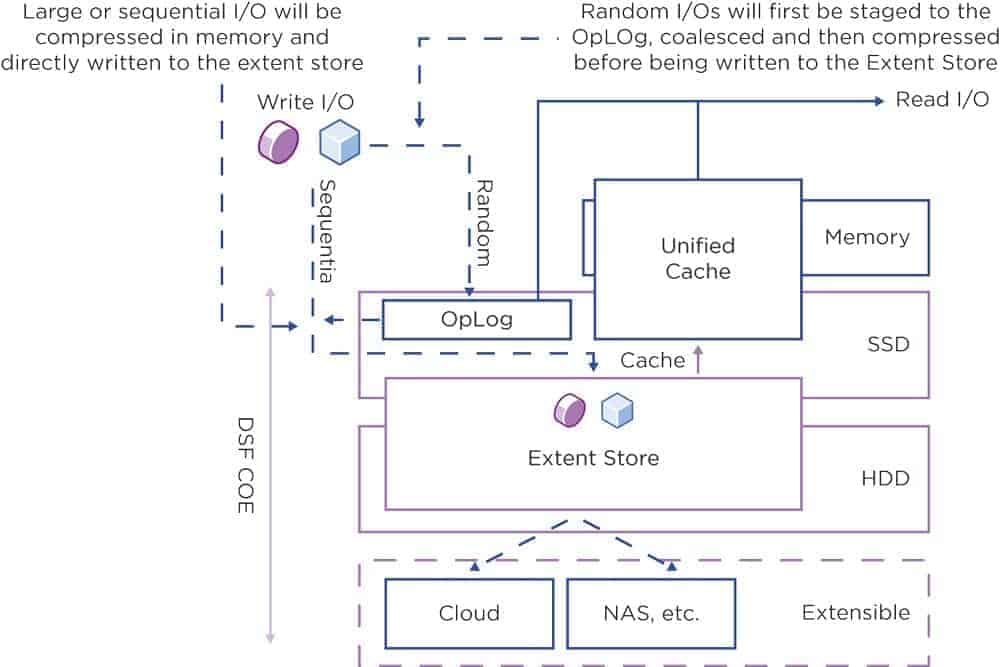

If you sketch the input/output path for data access in the DSF, three logical areas emerge: the OpLog, the Extent Store and the Content Cache (also known as the Unified Cache; see Figure 2, page 48).

Roughly speaking, the OpLog sits on top of the SSD and NVMe storage level, while the Extent Store spans part of the SSD and NVMe storage and part of the hard disk storage. The Content Cache, on the other hand, spans the SSD, NVMe and RAM areas.

The OpLog acts as a write buffer (comparable to a file system journal) for the write I/O issued by the VMs on a node. Because it resides on SSD-based storage, these writes are processed at high speed.

The writes are also replicated from the OpLog to other nodes in the cluster, so the data remains available even if, for example, a node fails completely.

The OpLog can also absorb bursts of random write requests and coalesce them with other writes before transferring them sequentially to the Extent Store. The Extent Store spans SSD, NVMe and hard disk storage, and it is where the data is ultimately persisted.
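The coalescing behavior can be illustrated with a toy write buffer in Python. This is a sketch under simplifying assumptions, not the OpLog implementation: writes are fixed-size blocks addressed by block number, a later write to the same block replaces the earlier one, and `WriteBuffer`, `write` and `drain` are hypothetical names. Draining sorts the pending blocks and merges adjacent ones into sequential runs.

```python
from typing import Dict, List, Tuple

BLOCK_SIZE = 4  # bytes per block; tiny on purpose, for illustration only

class WriteBuffer:
    """Toy write buffer: absorbs random block writes, drains them as sequential runs."""

    def __init__(self) -> None:
        self._pending: Dict[int, bytes] = {}  # block number -> block data

    def write(self, block: int, data: bytes) -> None:
        if len(data) != BLOCK_SIZE:
            raise ValueError("writes must be exactly one block")
        # A later write to the same block overwrites the buffered one.
        self._pending[block] = data

    def drain(self) -> List[Tuple[int, bytes]]:
        """Return (start block, data) runs in block order, merging adjacent blocks."""
        runs: List[Tuple[int, bytes]] = []
        for block in sorted(self._pending):
            data = self._pending[block]
            if runs and runs[-1][0] + len(runs[-1][1]) // BLOCK_SIZE == block:
                start, buf = runs[-1]
                runs[-1] = (start, buf + data)  # extend the current sequential run
            else:
                runs.append((block, data))  # start a new run
        self._pending.clear()
        return runs

buf = WriteBuffer()
for block, data in [(7, b"aaaa"), (2, b"bbbb"), (3, b"cccc"), (8, b"dddd"), (7, b"EEEE")]:
    buf.write(block, data)
print(buf.drain())  # [(2, b'bbbbcccc'), (7, b'EEEEdddd')]
```

Five random writes reach the backing store as two sequential writes, which is the effect the OpLog is described as providing.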

Within the Extent Store, the ILM (Information Lifecycle Manager) is responsible for finding the optimum storage location for the data. It decides based on the input/output pattern and how frequently the data is accessed.

The ILM stores older or "cold" data that is no longer accessed frequently in the hard disk area. This frees up space on the more expensive SSD and NVMe level, where newer - and "hotter" - data can then be stored.
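A heavily simplified Python sketch of this hot/cold decision follows. It assumes equal-sized extents, an SSD tier measured as a number of extent slots and a single access counter per extent. `Extent` and `apply_tiering` are hypothetical, and the real ILM also takes the I/O pattern into account, as described above.

```python
from dataclasses import dataclass
from typing import List

@dataclass
class Extent:
    name: str
    accesses: int  # accesses observed in the current measurement window
    tier: str = "ssd"

def apply_tiering(extents: List[Extent], ssd_slots: int) -> None:
    """Keep the most frequently accessed extents on SSD and demote the rest to HDD."""
    ranked = sorted(extents, key=lambda e: e.accesses, reverse=True)
    for rank, extent in enumerate(ranked):
        extent.tier = "ssd" if rank < ssd_slots else "hdd"

extents = [Extent("old-logs", 1), Extent("orders", 500), Extent("archive", 0), Extent("index", 120)]
apply_tiering(extents, ssd_slots=2)
print([(e.name, e.tier) for e in extents])
# [('old-logs', 'hdd'), ('orders', 'ssd'), ('archive', 'hdd'), ('index', 'ssd')]
```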

However, the OpLog can also be bypassed. This makes sense for workloads with a sequential access pattern (i.e. no random I/O).

Coalescing several writes would bring no improvement here, so the data is written directly to the Extent Store.
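The routing decision can be sketched in a few lines of Python. The heuristic is an assumption made for illustration: a stream counts as sequential when each recent write starts exactly where the previous one ended, over at least `min_run` writes. `is_sequential`, `route_write` and the threshold are hypothetical and not the criteria Nutanix actually uses.

```python
from typing import Sequence

def is_sequential(offsets: Sequence[int], io_size: int) -> bool:
    """True if every I/O starts exactly where the previous one ended."""
    return all(nxt - cur == io_size for cur, nxt in zip(offsets, offsets[1:]))

def route_write(recent_offsets: Sequence[int], io_size: int, min_run: int = 4) -> str:
    """Choose a write path: long sequential runs bypass the buffer, everything else uses it."""
    if len(recent_offsets) >= min_run and is_sequential(recent_offsets, io_size):
        return "extent_store"  # coalescing would gain nothing
    return "oplog"  # absorb and coalesce random writes first

print(route_write([0, 4096, 8192, 12288], io_size=4096))  # extent_store
print(route_write([0, 81920, 4096, 40960], io_size=4096))  # oplog
```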

In the read path, the Content Cache is particularly noteworthy. It stores data in deduplicated form: even if different VMs access the same data, the cache holds only one instance of it.

In addition, the Content Cache resides partly in main memory and partly on SSD and NVMe storage, so most read requests can be served directly from fast media.

If the data is not in the Content Cache during a read, the system retrieves it from the Extent Store, possibly from the hard disks. Subsequent reads of the same data are then served from the Content Cache.
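The deduplicated read path can be modeled as a cache keyed by a content fingerprint. In this minimal Python sketch, `ContentCache` is hypothetical, a plain dict stands in for the Extent Store, and SHA-256 from the standard library serves as the fingerprint; it illustrates the principle of one cached copy per distinct content, not the Unified Cache itself.

```python
import hashlib
from typing import Dict

class ContentCache:
    """Toy read cache that stores each distinct block content only once."""

    def __init__(self, extent_store: Dict[str, bytes]) -> None:
        self._extent_store = extent_store  # stand-in for the slower Extent Store
        self._fingerprint_of: Dict[str, str] = {}  # block id -> content fingerprint
        self._content: Dict[str, bytes] = {}  # fingerprint -> single cached copy
        self.misses = 0

    def read(self, block_id: str) -> bytes:
        fingerprint = self._fingerprint_of.get(block_id)
        if fingerprint is not None:
            return self._content[fingerprint]  # cache hit
        # Cache miss: fetch from the Extent Store, then cache the data by content.
        self.misses += 1
        data = self._extent_store[block_id]
        fingerprint = hashlib.sha256(data).hexdigest()
        self._fingerprint_of[block_id] = fingerprint
        self._content.setdefault(fingerprint, data)  # identical content is kept once
        return data

    def unique_entries(self) -> int:
        return len(self._content)

extent_store = {"vm1/blk0": b"os-image", "vm2/blk0": b"os-image", "vm1/blk1": b"app-data"}
cache = ContentCache(extent_store)
for block_id in ["vm1/blk0", "vm2/blk0", "vm1/blk0", "vm1/blk1"]:
    cache.read(block_id)
print(cache.misses, cache.unique_entries())  # 3 2
```

In this toy, the second VM's first read still counts as a miss because the fingerprint is only known after fetching the data; the point is that both VMs end up sharing a single cached copy.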

An important storage function is data compression. Nutanix uses this functionality to increase data efficiency on the drives. The DSF offers both inline and post-process compression.

This allows the appropriate method to be used depending on the requirements of the applications and data types. With the inline approach, sequential data streams or large input/output blocks are compressed in memory before they are written to disk.

With post-process compression, on the other hand, the data is initially stored uncompressed. The Curator framework on the Nutanix nodes, which is based on MapReduce, then takes care of compressing the data cluster-wide.

If inline compression is used for random write I/O, the system first writes the data to the OpLog, where it is coalesced and then compressed in memory before being written to the Extent Store.
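The difference between the two compression modes is easy to show with Python's standard `zlib` module. In this sketch, `ToyStore` and its methods are hypothetical, a dict stands in for the drives, and a single-process loop stands in for the distributed Curator job; zlib is simply a convenient codec, not necessarily the one Nutanix uses.

```python
import zlib
from typing import Dict

class ToyStore:
    """Illustrates inline vs. post-process compression on a dict-backed store."""

    def __init__(self) -> None:
        self.blocks: Dict[int, bytes] = {}
        self.is_compressed: Dict[int, bool] = {}

    def write_inline(self, block: int, data: bytes) -> None:
        # Inline: compress in memory before the data is persisted.
        self.blocks[block] = zlib.compress(data)
        self.is_compressed[block] = True

    def write_uncompressed(self, block: int, data: bytes) -> None:
        # Post-process path: persist the data as-is now, compress it later.
        self.blocks[block] = data
        self.is_compressed[block] = False

    def post_process_pass(self) -> int:
        """Background pass (stand-in for a Curator job): compress leftover blocks."""
        compressed = 0
        for block, done in list(self.is_compressed.items()):
            if not done:
                self.blocks[block] = zlib.compress(self.blocks[block])
                self.is_compressed[block] = True
                compressed += 1
        return compressed

    def read(self, block: int) -> bytes:
        data = self.blocks[block]
        return zlib.decompress(data) if self.is_compressed[block] else data

store = ToyStore()
payload = b"SAP" * 1000
store.write_inline(0, payload)
store.write_uncompressed(1, payload)
print(store.post_process_pass())  # 1
print(store.read(1) == payload, len(store.blocks[1]) < len(payload))  # True True
```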

Reliability

Another important storage function is redundant data storage: data must be protected against node failures and therefore stored on multiple nodes. Nutanix uses a replication factor, or RF for short, which can also be understood as a resilience factor.

Combined with a checksum for the data, this ensures data redundancy and high availability even if nodes or disks fail.

The OpLog plays an important role here as a persistent write buffer: it collects all incoming writes on the SSD tier, which offers short access times.

When data is written to a node's local OpLog, it can be replicated synchronously to the OpLogs on the CVMs of one, two or three other nodes, depending on the RF setting. CVMs (controller VMs) are the Nutanix control VMs that run the HCI software on each node.

Only when these OpLogs have acknowledged the write is it considered successfully completed. This ensures that the data exists in at least two independent locations, which achieves the goal of fault tolerance.
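The acknowledgement rule can be sketched as follows. The Python model is hypothetical: `OpLog` is a plain in-memory list with an `online` flag, `replicated_write` writes to the local OpLog and to `rf - 1` peers (assuming RF counts the total number of copies), and the write only counts as successful if every copy is acknowledged.

```python
from dataclasses import dataclass, field
from typing import List

@dataclass
class OpLog:
    name: str
    online: bool = True
    entries: List[bytes] = field(default_factory=list)

    def append(self, data: bytes) -> bool:
        """Persist the write and return an acknowledgement (False if unreachable)."""
        if not self.online:
            return False
        self.entries.append(data)
        return True

def replicated_write(data: bytes, local: OpLog, peers: List[OpLog], rf: int) -> bool:
    """Write locally and to rf - 1 peers; succeed only if every copy is acknowledged."""
    targets = [local] + peers[: rf - 1]
    if len(targets) < rf:
        return False  # not enough nodes to satisfy the replication factor
    acks = [oplog.append(data) for oplog in targets]  # one synchronous call per copy
    return all(acks)

a, b, c = OpLog("A"), OpLog("B"), OpLog("C")
print(replicated_write(b"tx-1", a, [b, c], rf=2))  # True
c.online = False
print(replicated_write(b"tx-2", a, [b, c], rf=3))  # False
```

The failed second write still leaves copies on A and B; a real system would retry on another healthy node or roll back, which this sketch does not model.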

The replication factor is configured in the Nutanix Prism management console. All nodes take part in OpLog replication, so no individual node becomes a hotspot: the additional write load caused by replication is distributed as evenly as possible. As a result, write performance also scales linearly.

When data is written to the OpLog, the system calculates a checksum and stores it in the metadata. The data is then drained asynchronously from the OpLog to the Extent Store. Because each OpLog writes its data to the Extent Store, the data is stored there as many times as the configured RF requires.

If a node or even a single disk fails, the affected data is re-replicated among the remaining nodes in the cluster so that the configured RF is restored.
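Restoring the replication factor after a failure amounts to finding every extent that lost a copy and creating a new one elsewhere. In this hypothetical Python sketch, `rereplicate` takes a map of extents to the nodes holding them and picks the least-loaded healthy node that does not already hold a copy; the load-based choice is an assumption that mirrors the even distribution described above, not Nutanix's actual placement logic.

```python
from collections import Counter
from typing import Dict, List, Set

def rereplicate(placement: Dict[str, Set[str]], failed: str,
                healthy: List[str], rf: int) -> Dict[str, Set[str]]:
    """Restore rf copies of every extent after `failed` drops out of the cluster."""
    # Current number of copies held by each surviving node.
    load = Counter(n for nodes in placement.values() for n in nodes if n != failed)
    result: Dict[str, Set[str]] = {}
    for extent, nodes in placement.items():
        survivors = {n for n in nodes if n != failed}
        missing = max(0, rf - len(survivors))
        # Prefer the least-loaded healthy nodes that do not already hold a copy.
        candidates = sorted((n for n in healthy if n not in survivors and n != failed),
                            key=lambda n: (load[n], n))
        for node in candidates[:missing]:
            survivors.add(node)
            load[node] += 1
        result[extent] = survivors
    return result

placement = {"e1": {"A", "B"}, "e2": {"B", "C"}, "e3": {"A", "C"}}
new = rereplicate(placement, failed="A", healthy=["B", "C", "D"], rf=2)
print({e: sorted(n) for e, n in new.items()})
# {'e1': ['B', 'D'], 'e2': ['B', 'C'], 'e3': ['C', 'D']}
```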

When the data is read, its checksum is recalculated and compared with the value stored in the metadata. If the two do not match, the data is read from another node whose copy has a matching checksum, and this valid copy replaces the corrupted one. This ensures that the data returned is valid.
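The verify-and-repair read can be sketched in Python as follows. CRC32 from the standard `zlib` module is only a stand-in checksum (the article does not name the algorithm), each replica is modeled as a dict, and `checksum` and `verified_read` are hypothetical names.

```python
import zlib
from typing import Dict, List

def checksum(data: bytes) -> int:
    # CRC32 is used here only as a stand-in checksum.
    return zlib.crc32(data)

def verified_read(extent: str, replicas: List[Dict[str, bytes]], metadata: Dict[str, int]) -> bytes:
    """Read an extent, verify it against the stored checksum and repair it if needed.

    replicas[0] is the local copy; the others are copies on remote nodes.
    """
    expected = metadata[extent]
    local = replicas[0]
    if checksum(local[extent]) == expected:
        return local[extent]
    for remote in replicas[1:]:
        candidate = remote[extent]
        if checksum(candidate) == expected:
            local[extent] = candidate  # replace the corrupted local copy
            return candidate
    raise OSError(f"no copy of {extent} matches its checksum")

good = b"order 4711"
metadata = {"e1": checksum(good)}
node_a = {"e1": b"order 4712"}  # silently corrupted local copy
node_b = {"e1": good}
print(verified_read("e1", [node_a, node_b], metadata))  # b'order 4711'
print(node_a["e1"] == good)  # True
```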

Storage tiering

An important task of the DSF is to aggregate the storage capacity of all nodes in a cluster and make the entire storage area available to every node. This "disk tiering" is made possible by dedicated tiering algorithms.

With their help, the SSDs and hard disks in a cluster are available to all nodes, and DSF's Information Lifecycle Management (ILM) places data on the SSD or hard disk tier depending on how often it is accessed. These data movements are triggered automatically.

To preserve data locality in storage tiering, the following rule applies: even though all SSDs in a cluster are in principle available to all nodes, the local node's SSD tier has the highest priority for local input/output operations.

This approach is extended when a defined RF requires data to be stored with high availability, i.e. on multiple nodes. Because the DSF combines all SSDs and hard disk drives into a cluster-wide storage layer, the entire aggregated capacity of this logical storage is available to every node, whether local or remote. All ILM actions take place without any explicit intervention by the administrator.

With this technology, DSF ensures that the storage devices within each tier are utilized evenly and efficiently. If the SSD tier of a local node fills up, DSF rebalances device utilization by moving less frequently accessed data from the local SSD to other SSDs in the cluster.

This frees up SSD space on the local node, so it can keep writing to its local SSDs instead of having to access another node's SSDs over the network. All controller VMs and SSDs support remote input/output operations.

This avoids bottlenecks and minimizes the penalty when input/output operations have to run over the network.
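The balancing step can be sketched as follows. This Python model is hypothetical: each SSD holds at most `capacity` equal-sized extents, each extent carries an access count, and `balance_ssd` moves the coldest local extents to the emptiest peer SSD until the local node has `free_target` free slots again. The thresholds and the choice of target are assumptions, not DSF parameters.

```python
from typing import Dict, List, Tuple

Extent = Tuple[str, int]  # (extent name, access count)

def balance_ssd(ssd: Dict[str, List[Extent]], node: str,
                capacity: int, free_target: int = 1) -> List[Tuple[str, str]]:
    """Move the coldest extents off `node`'s SSD until it has free_target free slots."""
    moves: List[Tuple[str, str]] = []
    local = sorted(ssd[node], key=lambda item: item[1])  # coldest first
    while local and capacity - len(local) < free_target:
        peers = [n for n in ssd if n != node and len(ssd[n]) < capacity]
        if not peers:
            break  # the cluster-wide SSD tier is full
        target = min(peers, key=lambda n: (len(ssd[n]), n))  # emptiest peer SSD
        extent = local.pop(0)
        ssd[target].append(extent)
        moves.append((extent[0], target))
    ssd[node] = local
    return moves

ssd = {"A": [("e1", 50), ("e2", 1), ("e3", 20), ("e4", 5)],
       "B": [("x", 10)],
       "C": [("y", 3), ("z", 7)]}
print(balance_ssd(ssd, "A", capacity=4, free_target=2))  # [('e2', 'B'), ('e4', 'B')]
```

The hottest extents (`e1`, `e3`) stay on node A's local SSD, so local I/O keeps the benefit of data locality.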

Performance

Thanks to the Nutanix architecture and technology, top performance can be achieved even in a completely virtualized SAP landscape, and across that landscape as a whole. This matters because, according to SAP, what counts is not individual parameters but overall performance.

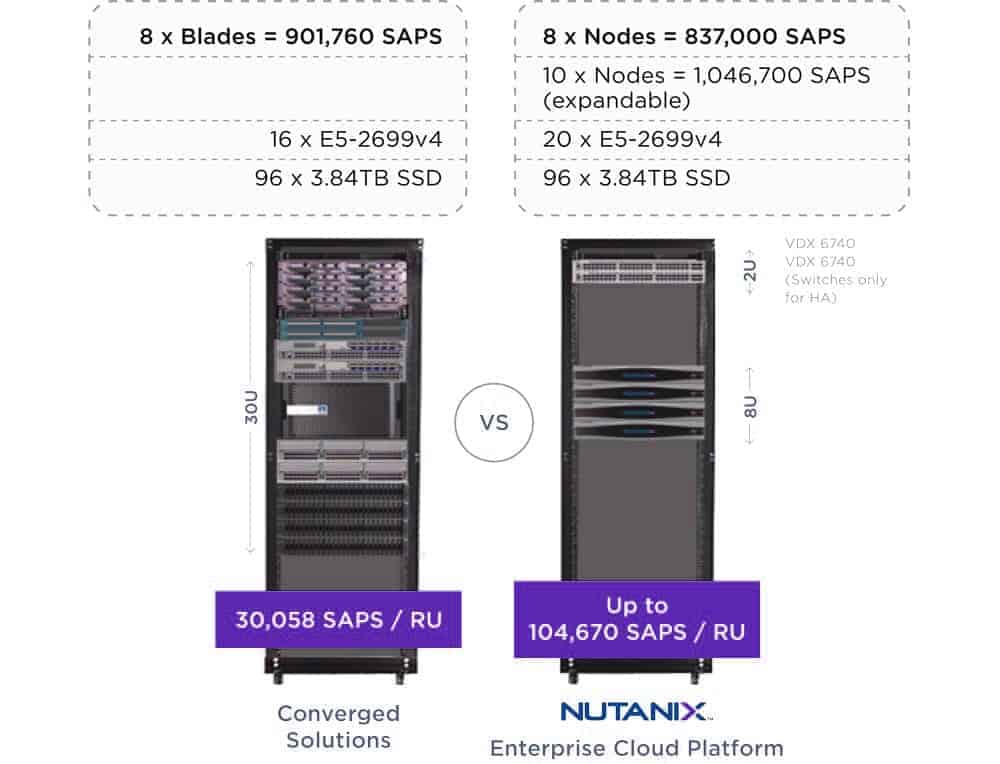

The Walldorf-based company measures this in a standardized unit, the SAP Application Performance Standard (SAPS). This hardware-independent unit describes the performance of a system configuration in an SAP environment and is derived from the Sales and Distribution (SD) Benchmark.

According to this benchmark, 100 SAPS are defined as 2,000 fully processed order line items per hour. The performance density derived from SAPS can be used to compare a fully virtualized SAP environment based on Nutanix with conventional implementation options:

For example, hyperconverged Nutanix nodes can achieve 104,670 SAPS per rack unit, while a converged solution equipped with comparable computing and storage resources achieves 30,058 SAPS per rack unit.
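The SAPS definition and the density figures above lend themselves to a quick calculation. The Python sketch below only uses numbers from this article; the function names and the 500,000 SAPS target landscape are hypothetical, chosen purely for illustration.

```python
import math

ORDER_LINE_ITEMS_PER_100_SAPS = 2_000  # per hour, from the SD benchmark definition

def saps_from_line_items(items_per_hour: float) -> float:
    """Convert fully processed order line items per hour into SAPS."""
    return items_per_hour * 100 / ORDER_LINE_ITEMS_PER_100_SAPS

def rack_units_needed(target_saps: float, saps_per_rack_unit: float) -> int:
    """Whole rack units required to deliver target_saps at a given density."""
    return math.ceil(target_saps / saps_per_rack_unit)

HCI_SAPS_PER_RU = 104_670
CONVERGED_SAPS_PER_RU = 30_058

print(f"density ratio: {HCI_SAPS_PER_RU / CONVERGED_SAPS_PER_RU:.2f}")  # density ratio: 3.48
print(f"space fraction: {CONVERGED_SAPS_PER_RU / HCI_SAPS_PER_RU:.2f}")  # space fraction: 0.29
target = 500_000  # hypothetical landscape size in SAPS
print(rack_units_needed(target, HCI_SAPS_PER_RU), rack_units_needed(target, CONVERGED_SAPS_PER_RU))  # 5 17
```

The space fraction of about 0.29 is where the "a third of the space" comparison comes from.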

In other words, to achieve the same performance, a fully virtualized SAP environment based on Nutanix needs less than a third of the data center space (see Figure 3, below). Given the features of the Nutanix infrastructure software described here, and the list is by no means exhaustive, concerns about virtualized SAP environments no longer seem justified.

This finding gives SAP decision-makers the freedom to align their infrastructure strictly with business criteria. Infrastructure costs up to 60 percent lower, power and cooling requirements reduced by up to 90 percent, radically less administrative effort thanks to automation, greater freedom in choosing hardware and compatibility with common public cloud stacks for hybrid scenarios: these are all important advantages that traditional infrastructures can deliver only to a very limited extent.

Virtualized SAP environments based on HCI software from Nutanix not only help to save costs in the short term, but also reduce the total cost of ownership of current and future SAP environments and facilitate the transition to the new product generation from Walldorf.

Nutanix customer Valpak

Valpak accelerates traditional and online business

Following Valpak's acquisition of Savings.com, the UK compliance company needed a simpler and more cost-effective infrastructure to support workloads such as SAP, Oracle and SQL Server. At the same time, the IT team was to become more agile without growing much and without compromising stability, and performance was to increase. By using infrastructure software from Nutanix, Valpak was able to:

- accelerate its deployments by a factor of 10

- increase performance by a factor of 8

- reduce administration time from 180 hours per month to just 2 hours

- cut its capital expenditure by a tenth while growing its IT infrastructure in line with demand

Nutanix customer ASM

ASM creates batch reports 5 times faster than before

ASM International, based in the Netherlands, is a leading supplier of wafer manufacturing equipment to the semiconductor industry. ASM was looking to replace its outdated infrastructure in order to speed up slow batch processing. It also wanted to free its IT operations team from constant hardware issues affecting applications as diverse as SAP ERP, CRM, SCM, PI, BW, BOBJ, PLM, SRM, PORTAL, etc. With the help of the Nutanix Enterprise Cloud, ASM:

- increased the speed of batch processing by a factor of 5 to meet growing market requirements

- reduced the time spent on operations by almost a third

- improved its system landscape by between 50 percent and 500 percent, depending on the area

- consolidated the virtual machines for SAP and databases, thereby saving license costs

Nutanix customer: Multinational company from the food industry

Multinational food company modernizes SAP landscape

A well-known food company was looking for ways to implement new business projects faster than before. However, new deployments on the existing infrastructure, with workloads such as Electronic Data Interchange (B2BI), Order-to-Cash (SAP NetWeaver and B2BI), SAP Project Staging (CRM, BW applications) and other applications, took too long, so the increasingly ambitious business goals could no longer be met. In addition, the company was running short of space in its data centers, needed to get ongoing storage costs under control and wanted a solution that would let it start implementing its cloud strategy immediately. The IT team rebuilt its huge UNIX environment using virtualized Linux servers running on the Nutanix Enterprise Cloud. The results were not long in coming:

- 40 percent lower total cost of ownership (TCO)

- massive reduction in the total footprint

- dismantling of the SAN infrastructure

- more agile implementation and distribution of new deployments

- slimmed-down upgrade processes

- a clear cloud roadmap