The Journey From Idea to Production

It's no secret that companies everywhere are working to realize the potential of AI, and of generative AI in particular. They know that AI can increase efficiency, reduce costs, improve customer and employee satisfaction, create competitive advantage and automate business processes. This is becoming achievable because the technology is now far more accessible to companies of all sizes: numerous deployment options and combinations of hardware and software have made it much easier to get started with AI and ML.

Companies also no longer need a full AI/ML deployment of their own to use certain capabilities, such as cloud-based AI services or AI-supported business tools. As a result, investment in AI technologies is set to increase. However, the AI market is currently very fragmented, and in this environment companies often find it difficult to choose the right path. Above all, they face the challenge of bringing AI models into production efficiently and securely. Even companies that have successfully completed their first AI projects hesitate to pursue, or fail to achieve, a comprehensive transformation. There are numerous reasons for this.

Many companies fear vendor lock-in or are wary of the dangers and risks of AI technology. Others see adapting AI models to their specific requirements as a difficult problem to solve. Given these challenges, many AI projects remain stuck in the pilot or proof-of-concept phase.

Red Hat's approach to AI and ML engineering is 100 percent open source-based.

The right AI model

Success requires a strategic approach that raises and answers fundamental questions before AI is introduced. Above all, this means selecting the right AI models and the appropriate platform or infrastructure.

There is a clear trend in the choice of AI models: more and more companies are opting not for large LLMs but for smaller models designed for specific use cases. Very large models with many billions of parameters have extensive general knowledge, but that alone does not make them particularly helpful when a company wants to build a chatbot for its customer service staff or help customers solve a specific problem with a product or service, because they lack the company's own product and process knowledge. Smaller models offer numerous advantages by comparison. They are easier to deploy, and because they are smaller, training runs finish much faster, which makes it practical to continuously incorporate new data, especially company- and domain-specific data.
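
To make the size difference concrete, a common back-of-the-envelope estimate is that holding a model's weights takes roughly its parameter count times the bytes used per parameter. The sketch below assumes that simplification; it ignores activations, the KV cache and runtime overhead, and the model sizes are illustrative rather than references to specific products.

```python
# Rough, illustrative estimate of the memory needed just to hold a model's
# weights. Assumption: memory ~= parameter count x bytes per parameter.
# Activations, the KV cache and framework overhead are ignored, so real
# requirements are higher.

BYTES_PER_PARAM = {
    "fp32": 4,
    "fp16": 2,  # bf16 also uses 2 bytes
    "int8": 1,
}


def weight_memory_gb(num_params: float, precision: str = "fp16") -> float:
    """Return the approximate weight memory in gigabytes (10**9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


if __name__ == "__main__":
    # Hypothetical model sizes, chosen only for illustration.
    for name, params in [("small model, 7B", 7e9), ("large model, 70B", 70e9)]:
        print(f"{name}: ~{weight_memory_gb(params):.0f} GB of weights at fp16")
```

Under these assumptions, a 7-billion-parameter model in 16-bit precision needs about 14 GB just for its weights, while a 70-billion-parameter model needs about 140 GB, more than a single 80 GB GPU can hold.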

Smaller models also bring significant cost savings, since large LLMs require considerable investment. Finally, by using smaller models, companies can reduce their dependence on large LLM providers, whose offerings are often a black box with respect to algorithms, training data and models. More generally, the industry is currently trending toward smaller, optimized AI models and more efficient ways of running them. One example is vLLM (virtual large language model), an open source inference server that performs inference faster, for example by using GPU memory more efficiently.
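
vLLM achieves part of its more efficient use of GPU memory through a technique its developers call PagedAttention: instead of reserving one contiguous region of the attention key-value (KV) cache per request, sized for the longest possible sequence, it hands out small fixed-size blocks as a sequence grows, similar to paging in operating-system virtual memory. The toy calculation below illustrates only that allocation idea under simplified assumptions (memory counted in token slots, made-up request lengths, a block size of 16); it is not how vLLM is implemented or configured.

```python
import math

# Toy model of paged KV-cache allocation, the idea behind vLLM's PagedAttention.
# This is NOT vLLM code: it only counts reserved "token slots" to compare two
# allocation strategies and ignores the actual byte size of keys and values.


def contiguous_slots(seq_lens: list[int], max_len: int) -> int:
    """Slots reserved when every request pre-allocates room for max_len tokens."""
    return len(seq_lens) * max_len


def paged_slots(seq_lens: list[int], block_size: int) -> int:
    """Slots reserved when each request receives fixed-size blocks on demand.

    At most block_size - 1 slots per request are wasted (in its last block).
    """
    return sum(math.ceil(n / block_size) * block_size for n in seq_lens)


if __name__ == "__main__":
    # Hypothetical workload: four requests of very different lengths.
    lengths = [20, 130, 500, 64]
    used = sum(lengths)
    for label, reserved in [
        ("contiguous (max_len=2048)", contiguous_slots(lengths, 2048)),
        ("paged (block_size=16)", paged_slots(lengths, 16)),
    ]:
        print(f"{label}: {reserved} slots reserved, {used / reserved:.0%} actually used")
```

For this made-up workload, contiguous reservation sets aside 8,192 slots of which only 714 are used, while paged allocation reserves 752, so far more concurrent requests fit into the same GPU memory.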

Project InstructLab

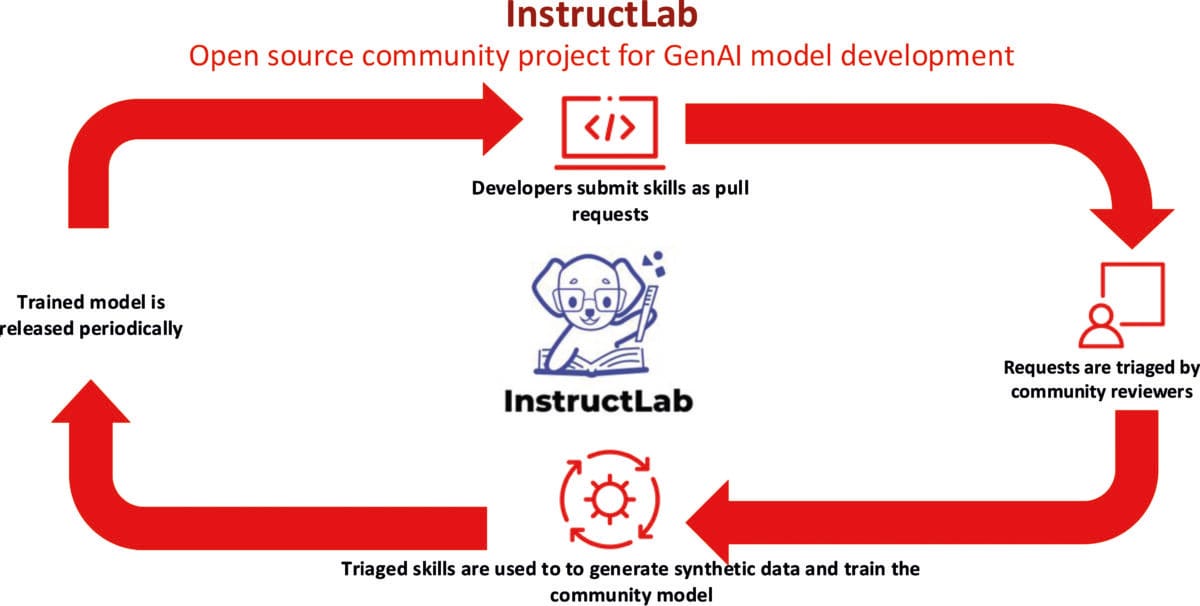

However, generic LLMs, which vary in how open they are about pretraining data and usage restrictions, can now also be extended for a specific business purpose. To this end, Red Hat and IBM have launched the InstructLab community project, a cost-effective way to enhance LLMs that requires far less data and computing resources than conventional retraining of a model. InstructLab significantly lowers the barrier to entry for AI model development, even for users who are not data scientists.

In InstructLab, domain experts from various fields can contribute their knowledge and expertise and thus further develop an openly accessible open source AI model. A model tuned with InstructLab can be optimized further with RAG (retrieval-augmented generation). In general, RAG supplements the knowledge contained in an LLM with information retrieved from external sources at query time, such as current real-time data or contextual, proprietary or domain-specific information from data repositories or existing documentation. This makes it much easier to keep the model's answers correct and reliable and reduces the risk of hallucinations.
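
The retrieve-then-augment pattern behind RAG can be sketched in a few lines. The sketch makes simplifying assumptions: the documents are hypothetical, retrieval uses bag-of-words cosine similarity as a stand-in for the embedding models and vector databases that production RAG systems usually employ, and the final step of sending the prompt to a model (for example, one tuned with InstructLab) is left out.

```python
import math
import re
from collections import Counter

# Hypothetical in-house documents standing in for "existing documentation".
DOCUMENTS = [
    "To reset the X100 router, hold the reset button for ten seconds.",
    "Invoices are sent on the first business day of each month.",
    "The X100 router supports firmware updates through the web console.",
]


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity of two term-frequency vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    """Return the k documents most similar to the query (the 'retrieval' step)."""
    q = Counter(tokenize(query))
    ranked = sorted(docs, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True)
    return ranked[:k]


def build_prompt(query: str, docs: list[str], k: int = 2) -> str:
    """Augment the user's question with retrieved context before generation."""
    context = "\n".join(f"- {d}" for d in retrieve(query, docs, k))
    return (
        "Answer using only the context below. If the answer is not in the context, say so.\n"
        f"Context:\n{context}\n\nQuestion: {query}"
    )


if __name__ == "__main__":
    print(build_prompt("How do I reset the X100 router?", DOCUMENTS))
```

Here, `build_prompt("How do I reset the X100 router?", DOCUMENTS)` places the two router documents in the context and leaves out the unrelated invoicing text, so the model can answer from company documentation rather than only from what it learned during training.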

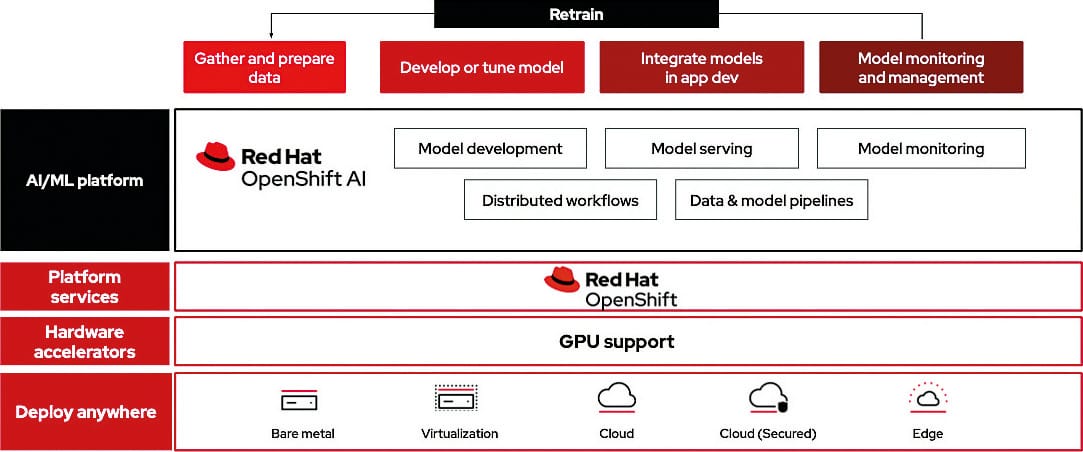

Red Hat OpenShift AI, a flexible, scalable AI/ML platform, enables organizations to develop and deploy AI-powered applications in hybrid cloud environments.

Open source AI

There is no question that companies must increasingly address new topics such as AI and ML in order to remain competitive and future-proof. However, adopting them unconditionally and uncritically is not justifiable. Security, stability and digital sovereignty must always be guaranteed.

Sovereignty primarily concerns technology, operations and data. Companies should ensure vendor independence, both in choosing between proprietary and open source components and in keeping their deployment options flexible. Data sovereignty means that companies know where and how their own data is stored and processed.

In the context of these sovereignty requirements, transparent open source principles, technologies and solutions are becoming increasingly important. Especially when AI is used in business-critical areas, a high degree of traceability and explainability must always be guaranteed. After all, companies do not want a black box; they want AI that is trustworthy and explainable and that observes legal and ethical principles. This is where proven open source processes, which have long ensured a high level of transparency in software development, show their particular value during implementation.

However, in AI, as in software development in general, there is also a risk of open source washing, as a comparison of the core elements of open source software and open source LLMs shows. Open source software is characterized by complete transparency, comprehensible algorithms, visible error handling and the ability to drive further development together with the community. In contrast, many so-called open source LLMs are freely available but offer little insight into the elements that correspond to source code in software development, such as training data, weights, model-internal guardrails or a reliable roadmap.

From a business perspective, however, traceability and a known data basis are fundamentally important, if only for liability or compliance reasons. In many cases, trustworthy AI is also essential because of stringent regulatory requirements, for example those arising from the GDPR or the EU AI Act. An open source approach is the right basis for this, as it offers transparency, innovation and security. However, it must be genuinely open source and meet the core open source criteria, including with regard to training data and AI models, rather than being mere open source washing.

The right platform

Whichever route a company takes, whether one LLM or several smaller models, the platform is always an important part of the AI environment. Many companies still rely on their own infrastructure and on-premises servers. However, this approach limits their flexibility to introduce innovative technologies such as AI. The cloud market has since evolved and now offers the best of both worlds: the hybrid cloud. It enables companies to keep sensitive data in on-premises storage while taking advantage of the scalability of the public cloud.

InstructLab is an open source project for improving LLMs. The community project launched by IBM and Red Hat enables simplified experimentation with generative AI models and optimized model adaptation.

Hybrid cloud for AI

A hybrid cloud platform is therefore also an ideal infrastructure basis for implementing AI securely, from developing and training AI models to integrating them into applications and operating modern MCP (Model Context Protocol) and A2A (Agent2Agent) services. The hybrid cloud offers much-needed flexibility in choosing the best environment for each AI workload. Companies can use it in two ways. First, they can develop and train an AI model on GPU farms in a public cloud with clear tenant separation, using publicly available data and synthetic test data, and then embed the model in their on-premises application. This matters because building their own costly GPU infrastructure is often not feasible for companies. Conversely, they can train models with confidential data in their own data center and then operate them in a public cloud.

MLOps (machine learning operations) capabilities are an important component of any platform designed for AI. MLOps resembles the established DevOps concept but focuses on deploying, maintaining and retraining machine learning models. Above all, MLOps enables effective collaboration between data science teams, software development teams and operations. It bridges the gap between developing ML models and using them in production. By simplifying, automating and scaling these processes, MLOps practices also significantly accelerate the rollout to production.
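
Two decisions that MLOps practices typically automate are when to retrain a model and whether a retrained model should replace the one in production. The following minimal sketch assumes that accuracy on a shared held-out evaluation set is the only metric; the class, the function names and the thresholds are hypothetical and do not reflect the API of OpenShift AI or any other MLOps platform.

```python
from dataclasses import dataclass


@dataclass
class ModelVersion:
    """A trained model version and its score on a shared held-out evaluation set."""
    name: str
    accuracy: float


def needs_retraining(live_accuracy: float, baseline_accuracy: float,
                     tolerance: float = 0.05) -> bool:
    """Flag retraining when accuracy measured in production falls more than
    `tolerance` below the accuracy the model had at release (a simple drift signal)."""
    return live_accuracy < baseline_accuracy - tolerance


def choose_for_production(current: ModelVersion, candidate: ModelVersion,
                          min_gain: float = 0.0) -> ModelVersion:
    """Promote the retrained candidate only if it beats the current model by
    more than `min_gain`; otherwise keep the current model in production."""
    if candidate.accuracy > current.accuracy + min_gain:
        return candidate
    return current


if __name__ == "__main__":
    prod = ModelVersion("v1", accuracy=0.91)
    if needs_retraining(live_accuracy=0.83, baseline_accuracy=prod.accuracy):
        retrained = ModelVersion("v2", accuracy=0.93)  # hypothetical retraining result
        prod = choose_for_production(prod, retrained, min_gain=0.01)
    print(f"model in production: {prod.name}")
```

In a real pipeline, retraining, evaluation and rollout would run as automated, versioned jobs; the sketch only captures the decision logic that connects them.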

To reduce operational complexity, a platform should also offer advanced tools for automating deployments and self-service access to models and resources. This allows companies to manage and scale their own environments for training and deploying models as needed. It is also important that tested and supported AI/ML tools are available.

Finally, a platform approach also makes it easier for industry partners, customers and the wider open source community to collaborate effectively and drive open source AI innovation forward. There is immense potential to expand this open collaboration in AI, especially in transparent work on models and their training.

AI in production

In this way, the world of AI can be opened up further and democratized. For AI users, a platform that is open to a network of hardware and software partners is a key advantage: it gives them the flexibility they need to implement their specific use cases. Such flexible, hybrid platforms for production AI are now available, as Red Hat OpenShift AI shows. With such a platform, a company can avoid vendor lock-in, keep pace with new AI innovations and implement a wide range of use cases. Red Hat OpenShift AI supports model training and fine-tuning as well as the serving and monitoring of models and AI applications, whether in the cloud, at the edge or on-premises. A modular architecture with plug-and-play support for open source components and other AI solutions ensures flexibility and compatibility. On such a foundation, companies can introduce AI step by step and keep costs under control.

Many companies are still evaluating AI or running proofs of concept. Although much of the current attention is on large language models and generative AI, one point should not be forgotten: moving AI into production at scale is a relatively new topic, and it affects not only LLMs but also predictive and analytical AI, for example. Here, too, there are challenges that ultimately only a platform approach can overcome.
