Quantity and Quality

Finally, the master data had to be prepared for the migration. At the same time, relevant duplicates in the master data can be identified and avoided with the right strategy. How should companies proceed here? SAP is not only giving companies an ultimatum for migration to S/4 by ending manufacturer support for previous versions. SAP is also pushing migration to the cloud.

The DSAG Investment Report 2022 shows that 32 percent of companies are using S/4 on-premises, six percent are using S/4 in the private cloud and two percent are using it in the public cloud. Companies are showing reluctance to fully migrate, according to the report, as it appears that just under half of companies using S/4 on-premises are still using ERP/ECC 6.0 or Business Suite 7 in parallel. This indicates that there is not yet enough confidence in a complete replacement with the new system.

Unfortunately, the cloud-first strategy and the changed data model all too often still encounter data silo structures that have grown over the years. An IDC study in 2020, for example, revealed that companies maintain an average of 23 data silos. A Barc study from the previous year shows that 65 percent of the study participants have still not fundamentally changed this situation. On the contrary, the number of data silos is actually continuing to rise. This is neither helpful for a complete migration nor for hybrid use of different systems, but makes the inevitable replacement even more difficult.

The DSAG report also shows that investment behavior for S/4 is slightly down on the previous year. Companies should take advantage of this time to improve the quality of their master data and maintain it over the long term. After all, only with assured high data quality can the replacement of the legacy system succeed and the added value of the new solution be fully exploited.

Like a fake fifty

Companies are well advised to transfer their master data to the new data model before migrating to S/4 and to assign the entities correctly. However, data silos that still exist, as well as name or address changes and human input errors, repeatedly result in duplicate or even multiple data records. Even in well-managed data collections, an average of five percent of data records are still redundant, with the exception of intentional duplicates such as different billing and shipping addresses. The duplicates must be tracked down in the sense that the relevant data record is found in a duplicate check and fuzzy search. This is the only way to clearly identify the correct business partner in case of doubt.

It is advisable to counteract the occurrence of duplicates as soon as they are entered so that the entire database does not have to be searched through and cleaned up later. Ideally, the duplicate check should be performed close to the dialog in the business partner transaction. However, checking in the address search body in SAP is also an option: Here, Hana Fuzzy Search can first search for and match existing data directly in the background when new data is entered. However, a rather extensive list of possible candidates of duplicate or multiple data records is created.

But which data records, i.e. which business partner, are correct and relevant in case of doubt? Manual post-processing of the proposal list is necessary. In the case of large databases, this can involve hundreds or even several thousand data records - hardly efficient for the clerks to manage, let alone identify the right business partner.

Hana Fuzzy Search

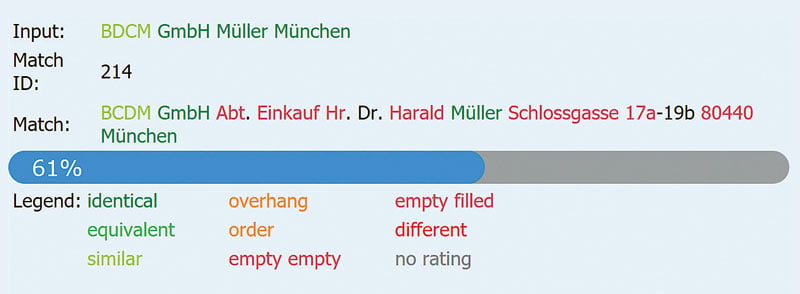

In the environment of S/4 or the Hana database under SAP ERP or SAP CRM, an additional intelligent identity solution integrated in SAP, such as from Uniserv, can therefore help as an add-on to narrow down the candidate list of the Hana fuzzy search to the most relevant hits. In this way, about 90 percent of irrelevant duplicates are sorted out. During the duplicate check, i.e., the creation or modification of a data record, as well as during the fuzzy search with searching, opening, evaluating and selecting the business partner, a manageable quantity of relevant data records remains at the end, which can finally be processed manually in an efficient manner. The final decision as to which data record is the right one, i.e. the relevant one, can be made quickly.

Special features for identification: While a few arguments of a person or company are entered in the search window for a fuzzy search, various elements, such as first and last name, street, house number, postal code and city are entered in a structured manner for the creation or modification of a data record during the duplicate check. Accordingly, special identification options must be mastered. After reduction, around ten percent of hits remain, for which a score indicates the aggregated probability of whether a data record is a duplicate or already represents the correct data record. The higher the score, the more likely it is a duplicate. The evaluation is based on predefined tokens, i.e. certain segments or components that are checked during the search using country-specific knowledge bases and an underlying algorithm.

The tokens can be viewed by the user, so the evaluation is transparent. In expert mode, users can see which tokens have been rated and how. This explains the classification of the relevance of a data set and helps employees to identify the correct, relevant data set. It is therefore advisable to rely on additional technology besides Hana Fuzzy Search, which narrows down the list of results to such an extent that business partners can be correctly identified in a time-saving and efficient manner. The rule is: relevance before quantity. This helps companies enormously in preventing new duplicates or multiple data records from arising in the first place.

Above all, the half of companies mentioned at the beginning, which work in parallel on different versions of SAP, run the risk of carrying duplicates as proverbial ballast in their data stock. They should therefore perform a duplicate check and overall data quality assurance before the complete changeover. And during subsequent operation, they should also keep a close eye on duplicate and multiple data records so that no new duplicates contaminate the system as "false fifties" in the long run.

Search parameters

Synonym search: The names "Steffi" and "Stefanie" are displayed as potentially belonging together.

Overhang search: Double names are also found by entering the single name, for example "Heinz Müller-Schulze" for "Heinz Müller".

Typo: Duplicate recognized despite incorrect entry, for example with "Stehpan" and "Stephan".

Acronym Resolution: Abbreviations are recognized, such as "TK" for "Techniker Krankenkasse".

Zip code equivalency: Zip codes that are geographically close to each other can be evaluated accordingly.