Apache Storm versus SAP Smart Data Streaming

![[shutterstock.com:294081374, Sergey Nivens]](https://e3mag.com/wp-content/uploads/2017/07/Sergey-Nivens_294081374.jpg)

Data determines the pulse of companies. The slower and more sparsely data flows, the harder it becomes to build customer loyalty, optimize machine control or respond to emergencies, for example. Without data flows, the real-time analysis that drives optimizations, recommendations and decisions falls by the wayside.

Architectures compared

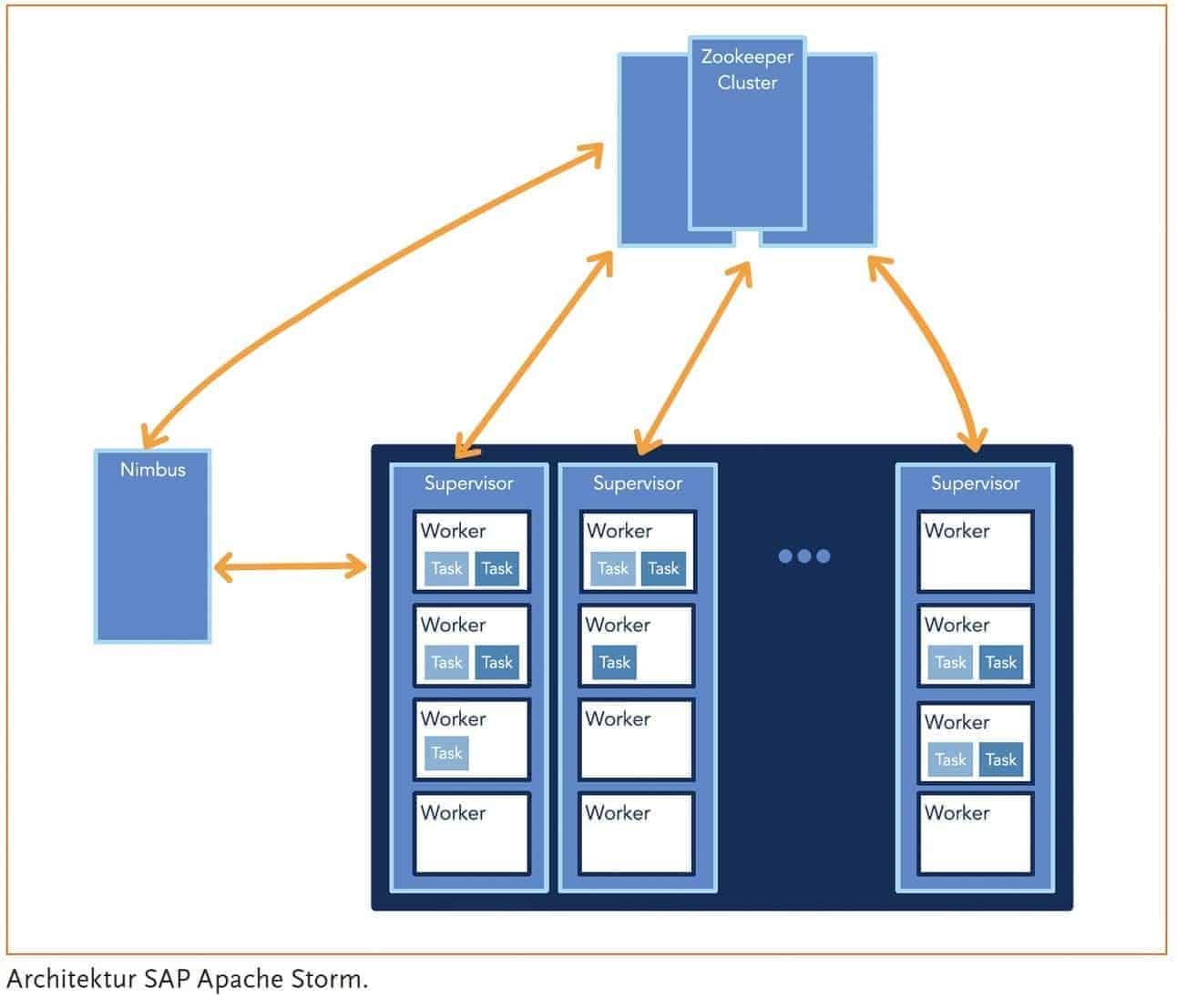

One of the most important streaming solutions is Storm, which was conceived by Nathan Marz and has since become an Apache project. It enables guaranteed, robust, distributed and fault-tolerant processing of real-time data in dynamically definable topologies.

The platform is built on dedicated hardware resources for a ZooKeeper cluster, the Nimbus and the Supervisors. The ZooKeeper cluster takes care of the distributed configuration of the individual systems and is now a fixed and solid component of many open source projects, such as Apache Hadoop.

The Nimbus takes care of the distribution of the individual topologies within the cluster and thus the provision of the project code and the required resources.

A topology orchestrates the data source objects (spouts) and the data processing objects (bolts) in a directed flow graph. The Nimbus itself is stateless and can be restarted without problems.
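
The idea of a directed flow graph of spouts and bolts can be illustrated with a small plain-Java model. This is only a sketch and deliberately not the Storm API: the class `MiniTopology`, its methods and the component names `sentences`, `split` and `upper` are assumptions made for illustration.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashMap;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Function;

// Plain-Java model of a topology; hypothetical names, NOT the Storm API.
class MiniTopology {
    // Bolt name -> processing step (one input tuple to zero or more output tuples).
    private final Map<String, Function<String, List<String>>> bolts = new LinkedHashMap<>();
    // Directed edges: component name -> names of the downstream bolts.
    private final Map<String, List<String>> edges = new HashMap<>();
    // Tuples received by each bolt, kept only so the flow can be inspected.
    final Map<String, List<String>> received = new HashMap<>();

    void addBolt(String name, Function<String, List<String>> step) {
        bolts.put(name, step);
    }

    void connect(String from, String to) {
        edges.computeIfAbsent(from, k -> new ArrayList<>()).add(to);
    }

    // Passes one tuple from the named component to all of its subscribers.
    void emit(String from, String tuple) {
        for (String to : edges.getOrDefault(from, List.of())) {
            received.computeIfAbsent(to, k -> new ArrayList<>()).add(tuple);
            for (String out : bolts.get(to).apply(tuple)) {
                emit(to, out);
            }
        }
    }

    static MiniTopology demo() {
        MiniTopology t = new MiniTopology();
        t.addBolt("split", s -> Arrays.asList(s.split(" ")));
        t.addBolt("upper", w -> List.of(w.toUpperCase()));
        t.connect("sentences", "split"); // spout -> bolt
        t.connect("split", "upper");     // bolt -> bolt
        // The "spout" here is simply a fixed list of sentences.
        for (String s : List.of("storm is fast", "data flows")) {
            t.emit("sentences", s);
        }
        return t;
    }

    public static void main(String[] args) {
        System.out.println(demo().received);
    }
}
```

In a real cluster the components would run in parallel on different workers. The sketch only shows how the graph determines which bolt sees which tuple.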

The Supervisors host the individual workers. Each worker is a JVM that can run one or more executors of a topology. Supervisors can be added or removed at any time while the platform is running.

Since version 1.0 of Apache Storm, a Pacemaker server can also be configured so that the ZooKeeper cluster is not overloaded and network communication is deliberately kept low.

A distributed cache makes it possible to roll out a topology only once. Configuration files, for example, can then be changed at runtime without having to restart the topology.

The streaming data can be evaluated using windows whose length and interval can be defined. This allows classic streaming scenarios, such as the top trends in social news, to be calculated.
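
How a window with a length and an interval produces a "top trend" can be sketched in plain Java. The following is a simplified, count-based illustration and not Storm's windowing API: the class `SlidingTopTrend`, its method names and the alphabetical tie-break are assumptions.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Collection;
import java.util.Deque;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Count-based sliding window; hypothetical names, NOT the Storm API.
class SlidingTopTrend {
    // A window covers the last `length` tuples and is evaluated
    // after every `interval` tuples.
    static List<String> topPerWindow(List<String> stream, int length, int interval) {
        Deque<String> window = new ArrayDeque<>();
        List<String> tops = new ArrayList<>();
        int seen = 0;
        for (String tag : stream) {
            window.addLast(tag);
            if (window.size() > length) {
                window.removeFirst(); // the oldest tuple leaves the window
            }
            if (++seen % interval == 0) {
                tops.add(mostFrequent(window));
            }
        }
        return tops;
    }

    // Returns the most frequent item; ties go to the alphabetically first item.
    static String mostFrequent(Collection<String> items) {
        Map<String, Integer> counts = new TreeMap<>();
        for (String item : items) {
            counts.merge(item, 1, Integer::sum);
        }
        String best = null;
        int bestCount = 0;
        for (Map.Entry<String, Integer> e : counts.entrySet()) {
            if (e.getValue() > bestCount) {
                best = e.getKey();
                bestCount = e.getValue();
            }
        }
        return best;
    }

    public static void main(String[] args) {
        List<String> tags = List.of("a", "b", "a", "c", "c", "c");
        System.out.println(topPerWindow(tags, 4, 2)); // prints [a, a, c]
    }
}
```

With a window length of four and an interval of two, the trend switches from `a` to `c` only once `c` dominates the last four tuples.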

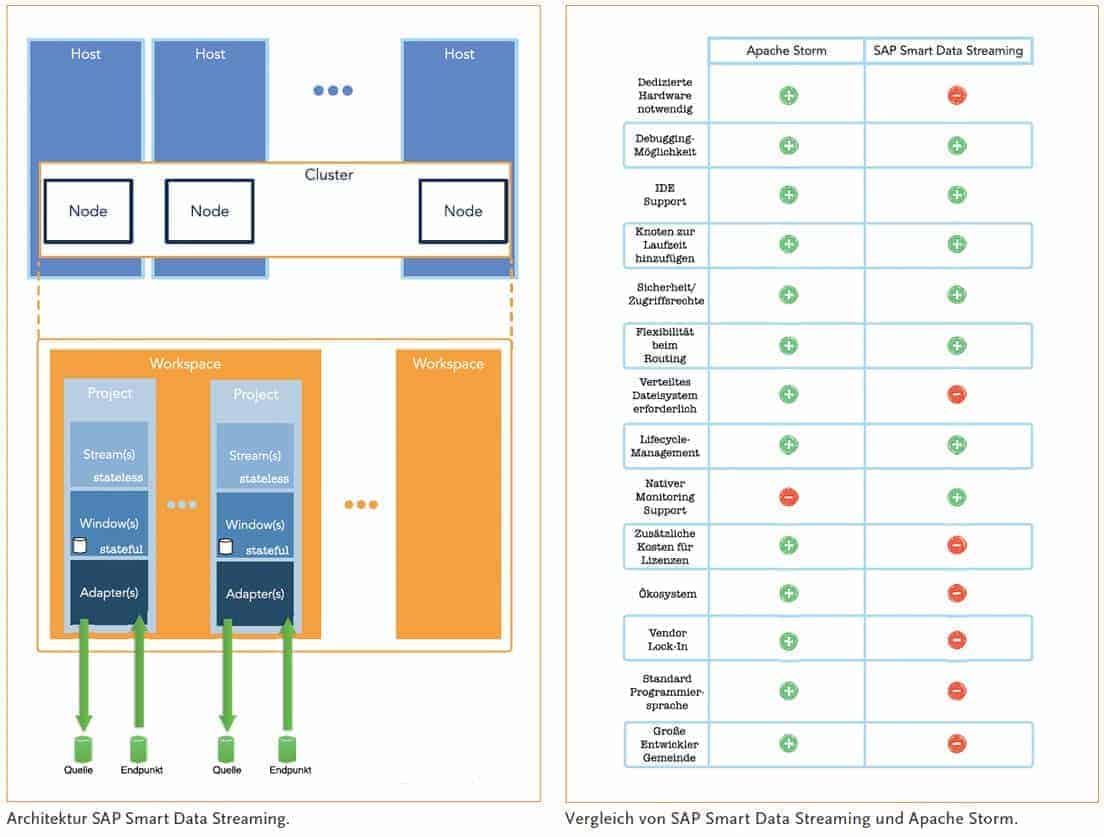

SAP Smart Data Streaming consists of the Streaming Server and a Streaming Cluster at the lowest architectural level. Each of the components must be installed on dedicated hardware.

A streaming cluster is divided into one or more nodes (clients), which represent the runtime environment of the streaming projects. Additional nodes can be added at runtime as desired. Once the cluster is installed and configured, a project can be created and executed within a workspace.

One or more workspaces can be operated on a cluster, for example to separate projects by content. A workspace defines a namespace for its projects and also allows access rights to be defined.

Different access rights therefore automatically lead to different workspaces. Projects are the smallest unit that can be executed on the cluster, and they define the business logic. Within a project, this is done via the components stream, window and adapter.

All three components can be used once or several times in a project. Streams and windows are the processing units for the incoming data. The data flowing into the system can be transformed, enriched or aggregated using the stateless streams or the stateful windows.

To keep the state available at all times, windows use a policy-based data store. A policy defines either a maximum number of rows or a time limit for which the data is retained.

For example, data flowing into the system can be retained for five minutes, which makes it possible to identify a deviation in the operating temperature or pressure of a machine.
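
The five-minute example can be sketched in plain Java as a time-based retention window that flags a reading when it deviates from the mean of the retained readings. This is an illustration only and not CCL: the class `TemperatureWindow`, the tolerance parameter and the deviation rule are assumptions.

```java
import java.util.ArrayDeque;
import java.util.Deque;

// Time-based retention in plain Java; hypothetical names, NOT CCL.
class TemperatureWindow {
    private static final class Reading {
        final long timeMs;
        final double value;

        Reading(long timeMs, double value) {
            this.timeMs = timeMs;
            this.value = value;
        }
    }

    private final long retentionMs;
    private final Deque<Reading> readings = new ArrayDeque<>();

    TemperatureWindow(long retentionMs) {
        this.retentionMs = retentionMs;
    }

    // Adds a reading and reports whether it deviates from the mean of the
    // previously retained readings by more than `tolerance`.
    boolean addAndCheck(long timeMs, double value, double tolerance) {
        // Expire readings that are older than the retention period.
        while (!readings.isEmpty() && readings.peekFirst().timeMs <= timeMs - retentionMs) {
            readings.removeFirst();
        }
        boolean deviates = false;
        if (!readings.isEmpty()) {
            double sum = 0;
            for (Reading r : readings) {
                sum += r.value;
            }
            deviates = Math.abs(value - sum / readings.size()) > tolerance;
        }
        readings.addLast(new Reading(timeMs, value));
        return deviates;
    }

    static boolean[] demo() {
        TemperatureWindow w = new TemperatureWindow(5 * 60 * 1000L);
        return new boolean[] {
            w.addAndCheck(0L, 70.0, 5.0),       // nothing to compare against yet
            w.addAndCheck(60_000L, 71.0, 5.0),  // close to the mean of 70.0
            w.addAndCheck(120_000L, 80.0, 5.0), // 9.5 above the mean of 70.5
            w.addAndCheck(600_000L, 90.0, 5.0)  // all older readings have expired
        };
    }

    public static void main(String[] args) {
        System.out.println(java.util.Arrays.toString(demo()));
    }
}
```

The last reading is not flagged because the window is empty again after ten minutes, which shows that the retention period also limits what counts as "normal".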

By default, this cache is implemented as a rolling window. A keyword converts it into a jumping window, in which the data is deleted all at once after the defined time or row interval instead of being continuously overwritten on a rolling basis.
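
The difference between the two window types can be made concrete with a row-based sketch in plain Java. It is not CCL, and the exact moment at which the product purges a jumping window is an assumption here: the hypothetical class `RetentionWindow` clears a full window when the next row arrives.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.List;

// Rolling versus jumping retention; hypothetical names, NOT CCL.
class RetentionWindow {
    private final int maxRows;
    private final boolean jumping;
    private final Deque<Integer> rows = new ArrayDeque<>();

    RetentionWindow(int maxRows, boolean jumping) {
        this.maxRows = maxRows;
        this.jumping = jumping;
    }

    void add(int value) {
        if (rows.size() == maxRows) {
            if (jumping) {
                rows.clear();       // jumping: drop the whole batch at once
            } else {
                rows.removeFirst(); // rolling: drop only the oldest row
            }
        }
        rows.addLast(value);
    }

    List<Integer> contents() {
        return new ArrayList<>(rows);
    }

    // Feeds the values 1..5 into a window that keeps at most three rows.
    static List<Integer> run(boolean jumping) {
        RetentionWindow w = new RetentionWindow(3, jumping);
        for (int i = 1; i <= 5; i++) {
            w.add(i);
        }
        return w.contents();
    }

    public static void main(String[] args) {
        System.out.println("rolling: " + run(false)); // prints rolling: [3, 4, 5]
        System.out.println("jumping: " + run(true));  // prints jumping: [4, 5]
    }
}
```

The rolling window always holds the three most recent rows, while the jumping window starts from scratch after every full batch.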

The adapters within a project connect streams and windows to data sources and data endpoints. Adapters can pass on the information from events without any further operation or perform one of the following actions: insert, update, delete, upsert, safedelete.
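
What the five actions mean for a keyed, stateful window can be sketched in plain Java. The semantics below are a common reading of these operations and should be treated as an assumption: insert and update fail if the key does or does not exist, respectively, while upsert and safedelete always succeed. The class `KeyedWindow` is hypothetical and not part of any SAP API.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Keyed window with operation codes; hypothetical names, NOT an SAP API.
class KeyedWindow {
    enum Op { INSERT, UPDATE, DELETE, UPSERT, SAFEDELETE }

    private final Map<String, Double> rows = new HashMap<>();

    // Returns true if the event was applied and false if it was rejected.
    boolean apply(Op op, String key, Double value) {
        boolean exists = rows.containsKey(key);
        switch (op) {
            case INSERT:
                if (exists) return false;  // duplicate key
                rows.put(key, value);
                return true;
            case UPDATE:
                if (!exists) return false; // nothing to update
                rows.put(key, value);
                return true;
            case DELETE:
                if (!exists) return false; // nothing to delete
                rows.remove(key);
                return true;
            case UPSERT:
                rows.put(key, value);      // insert or overwrite
                return true;
            case SAFEDELETE:
                rows.remove(key);          // a missing key is not an error
                return true;
            default:
                throw new IllegalArgumentException("unknown op " + op);
        }
    }

    Double get(String key) {
        return rows.get(key);
    }

    static List<Boolean> demo() {
        KeyedWindow w = new KeyedWindow();
        List<Boolean> results = new ArrayList<>();
        results.add(w.apply(Op.INSERT, "m1", 70.0));
        results.add(w.apply(Op.INSERT, "m1", 71.0));
        results.add(w.apply(Op.UPDATE, "m1", 72.5));
        results.add(w.apply(Op.UPSERT, "m2", 65.0));
        results.add(w.apply(Op.DELETE, "m3", null));
        results.add(w.apply(Op.SAFEDELETE, "m3", null));
        return results;
    }

    public static void main(String[] args) {
        System.out.println(demo()); // prints [true, false, true, true, false, true]
    }
}
```

The second insert and the plain delete are rejected, whereas upsert and safedelete are the tolerant variants of the same operations.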

This enables a wide variety of data sources and endpoints to be connected in different operating modes. It should be noted that streams, by definition, cannot modify earlier records.

Language capabilities

Apache Storm is implemented in Java and Clojure and natively provides access to the full language capabilities of Java. Code written in other languages can also be integrated, for example via Storm's multi-language protocol or Distributed RPC. A popular example of integrating another language into an otherwise pure Java development is Python, for instance to use libraries from the field of machine learning.

Here, the Python program is called directly from the Java source code and the data is passed to it directly. The Python application then performs the calculations and returns the result to the calling Java program, so the flow of the topology is not interrupted.

SAP Smart Data Streaming uses its own language to program the streaming application. The event-based Continuous Computation Language (CCL) is based on SQL syntax and can be extended using CCL scripts.

To stick with the SQL analogy, CCL can be thought of as an endlessly repeated, dynamic execution of SQL queries, with each execution triggered by events in the data streams. The CCL scripts allow developers to write custom functions and operators.

The syntax of the scripts is similar to the C programming language. All streaming projects are written in CCL and then compiled and stored as executable CCX files in the streaming server.

A classic example of the use of CCL scripts is the definition of flexible operators. These allow the creation of custom dictionaries. It would then be possible, for example, to determine the time span between two user-defined events.
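
The time-span example can be sketched in plain Java, with a map playing the role of the custom dictionary. This is not CCL script: the class `EventSpan`, the event types `start` and `end` and the machine identifiers are assumptions made for illustration.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.OptionalLong;

// Time span between two events per key; hypothetical names, NOT CCL script.
class EventSpan {
    // Dictionary: machine id -> timestamp of the most recent "start" event.
    private final Map<String, Long> started = new HashMap<>();

    // Returns the elapsed milliseconds when an "end" event closes a span,
    // otherwise an empty result.
    OptionalLong onEvent(String machineId, String type, long timestampMs) {
        if (type.equals("start")) {
            started.put(machineId, timestampMs);
        } else if (type.equals("end")) {
            Long begin = started.remove(machineId);
            if (begin != null) {
                return OptionalLong.of(timestampMs - begin);
            }
        }
        return OptionalLong.empty();
    }

    static long demo() {
        EventSpan op = new EventSpan();
        op.onEvent("press-1", "start", 1_000L);
        op.onEvent("press-2", "start", 1_500L);
        return op.onEvent("press-1", "end", 4_200L).getAsLong();
    }

    public static void main(String[] args) {
        System.out.println(demo() + " ms"); // prints 3200 ms
    }
}
```

The operator keeps state between events, which is exactly what distinguishes it from a stateless transformation of a single record.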

A nice feature is the debugging capability, which allows controlled test data to be played into individual streams of the system.

Definition of the projects

Apache Storm's topology generation is based on a Java class that assembles the necessary components using different groupings (routing variations). These groupings regulate the flow of data and preserve information during the processing steps.
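
What a grouping decides, namely which task of the next bolt receives a tuple, can be sketched in plain Java. The two methods below only approximate the idea behind a fields grouping and a shuffle grouping and are not Storm's implementation: the class `Groupings` is hypothetical, and round robin stands in for Storm's randomized even distribution.

```java
// Routing decisions of two groupings; hypothetical names, NOT the Storm API.
class Groupings {
    // Fields grouping: tuples with the same key always reach the same task.
    static int fieldsGrouping(String key, int numTasks) {
        return Math.floorMod(key.hashCode(), numTasks);
    }

    // Shuffle grouping, approximated here by round robin over the tasks.
    static int roundRobin(long tupleIndex, int numTasks) {
        return (int) (tupleIndex % numTasks);
    }

    public static void main(String[] args) {
        String[] words = {"storm", "sap", "storm", "data"};
        for (int i = 0; i < words.length; i++) {
            System.out.println(words[i]
                    + " -> fields task " + fieldsGrouping(words[i], 4)
                    + ", shuffle task " + roundRobin(i, 4));
        }
    }
}
```

A counting bolt needs the fields variant so that all occurrences of one word end up in the same task, while a stateless bolt can use the shuffle variant to spread the load evenly.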

In SAP Smart Data Streaming, the projects are created visually and the individual elements are enriched with logic. The main part of the modeling of the streaming project in SAP Smart Data Streaming is done using SQL-like syntax.

Freedom and lock-in: ecosystems

Streaming data alone does not make an application. A streaming platform therefore also thrives on its ecosystem. In the case of Apache Storm, this is the entire zoo of the Apache family and, in addition, everything that can be addressed in some way with Java. This means that the flexibility is very high and the integration capability is also immense.

For the SAP contender, the situation is somewhat more limited in principle, since the platform is primarily aimed at SAP's own ecosystem. However, with SAP Smart Data Streaming Lite, SAP Hana and, for example, Siemens MindSphere, many application areas of a company are already covered.

An interesting feature is the SAP Smart Data Streaming Lite version, which makes it possible to outsource the preprocessing of the data to an embedded device. SAP is thus also proactively extending the streaming platform to the IoT environment.

Suitability in the corporate environment

Both streaming platforms are suitable for use in the enterprise environment. All issues regarding security, scalability and resource management, support, development status of the project ("open source"), service level agreements, integration with existing systems and maintainability can be handled by both without any problems.

SAP knows its way around the enterprise environment and its experience covers many scenarios and needs. With Apache Storm, it's the other way around. The software originates from the open source environment and has evolved into the enterprise landscape.

With the recent release 1.0, the project has also reached the necessary maturity. Thanks to Kerberos support, nothing stands in the way of secure use in a corporate context.

The often-cited weakness of the Nimbus as a single point of failure can also be overcome with an appropriate strategy (example: Hortonworks Blog).

Outlook and recommendation

Companies that want to implement a streaming solution in-house, for example in the areas of Industry 4.0, telematics, healthcare or e-commerce, can rely on both SAP Smart Data Streaming and Apache Storm. Both technologies can perform streaming tasks.

The drawbacks of the SAP solution are the need for dedicated hardware, the need for a distributed file system, the additional cost of licenses, the fact that the platform has so far been geared only to the SAP ecosystem, the additional learning curve for CCL, and the lack of a large developer community.

In contrast, Apache Storm only lacks native monitoring support, which is mitigated by the extensive ecosystem. In summary, SAP has implemented a good solution that seems very complex at the beginning but can be learned. However, the open source alternative is more open, flexible and cost-effective.